"... not at significance"

June 2019

What kind of reporting should we do for a test that’s currently live? Should we note a variation that looks like it might win? Or do we have to ignore all the data until the test concludes?

What’s wrong with reporting on variations mid-test

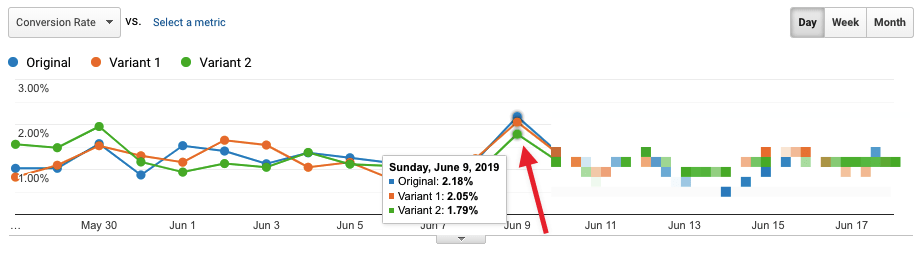

It’ll probably make a liar out of you. Check out these test results:

Never mind the data to the right 😁. On May 30, we can clearly see that Variant 2 is in the lead. In fact, it’s showing a 25% lift over the Original experience … not at significance.

Here are the same results, a week or so later:

Looks like we were wrong. Variant 2 is now showing an 18% drop in conversions … not at significance.

Here are the full results:

After 3 weeks, all three experiences have roughly the same conversion rate.

In this case, that was to be expected: this was actually an A/A/A test, so all three experiences were identical. But a real test will have data that’s just as noisy, or noisier. What if we’d told the team, or the client, or the CEO, that we had a winner 2 days in? Or that we had a loser a week later? We’d come off looking as unstable as our conversion rate.
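If you want to see this kind of noise for yourself, here is a minimal simulation sketch. It is not the test shown above: the traffic volume, the 5% conversion rate, and the function name `simulate_aa_lift` are all made-up assumptions. Two arms share the exact same true conversion rate, so any "lift" it reports is pure chance.

```python
import random


def simulate_aa_lift(days=21, visitors_per_day=500, true_rate=0.05, seed=0):
    """Return the cumulative relative lift of arm B over arm A, one value per day.

    Both arms use the same true conversion rate (an assumed, illustrative
    number), so every nonzero lift is noise.
    """
    rng = random.Random(seed)
    conv_a = conv_b = visitors = 0
    lifts = []
    for _ in range(days):
        visitors += visitors_per_day
        # Each visitor converts independently with probability true_rate.
        conv_a += sum(rng.random() < true_rate for _ in range(visitors_per_day))
        conv_b += sum(rng.random() < true_rate for _ in range(visitors_per_day))
        rate_a = conv_a / visitors
        rate_b = conv_b / visitors
        # Guard against dividing by zero if arm A has no conversions yet.
        lifts.append((rate_b - rate_a) / rate_a if rate_a else float("nan"))
    return lifts


if __name__ == "__main__":
    for day, lift in enumerate(simulate_aa_lift(), start=1):
        print(f"day {day:2d}: lift {lift:+.1%}")
```

Running it prints one line per day. Try a few different seeds: the early days tend to swing the most, because the sample is still small.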

What to report on instead

While a test is running, there are only a few things that you, or anyone else, need to know about it:

- Are metrics tracking correctly (for all variations)?

- How many visitors and total conversions have we measured?

- When do we expect to end the test?

Put this info in your updates, and leave it at that. Shift focus to the next test, or another source of insights.

This will protect your audience from the confusion and emotional upheaval that noisy web data can cause. It will also protect you from questions like “Why don’t we just call it now?”
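Here is one way the three status items above could be pulled into a one-line update. It is a rough sketch with assumptions baked in: it supposes you fixed a target sample size (`target_visitors`) before launch, and it projects the end date from average daily traffic so far. The function names and all numbers in the usage example are hypothetical.

```python
import math
from datetime import date, timedelta


def expected_end_date(start, today, visitors_so_far, target_visitors):
    """Project the end date from the average daily traffic seen so far."""
    days_elapsed = (today - start).days
    if days_elapsed <= 0 or visitors_so_far <= 0:
        raise ValueError("need at least one full day of traffic to project")
    daily_rate = visitors_so_far / days_elapsed
    remaining = max(target_visitors - visitors_so_far, 0)
    return today + timedelta(days=math.ceil(remaining / daily_rate))


def status_update(name, start, today, visitors, conversions,
                  target_visitors, tracking_ok):
    """Build a mid-test update that says nothing about which variation leads."""
    end = expected_end_date(start, today, visitors, target_visitors)
    tracking = "OK" if tracking_ok else "NEEDS ATTENTION"
    return (
        f"{name}: tracking {tracking} for all variations; "
        f"{visitors:,} visitors and {conversions:,} conversions so far; "
        f"expected end {end.isoformat()}."
    )


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    print(status_update("Homepage test", date(2019, 5, 28), date(2019, 6, 4),
                        visitors=7000, conversions=350,
                        target_visitors=21000, tracking_ok=True))
```

Notice what the update leaves out: there is no per-variation conversion rate and no lift, so there is nothing to announce early.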

Admittedly, it is way boring compared to turning yourself into a racetrack announcer and delivering a play-by-play of each variation’s performance. Sorry. It’s for the best. Your team needs you to demonstrate discipline. I know you can do it ᕦ(ò_óˇ)ᕤ